The intersection of artificial intelligence (AI) and musical acoustics has led to innovative applications, particularly in the classification of instruments by timbre. Timbre (often called tone color) is the quality of a sound that distinguishes it from other sounds of the same pitch and loudness. It depends on characteristics such as the relative strength of the harmonics and the attack and decay of the sound, which makes it a central topic in musical acoustics. Using AI for timbre-based instrument classification means applying computational methods to analyze and identify the distinct sonic signatures of different instruments.
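To make the definition of timbre concrete, the following minimal sketch synthesizes two tones with the same pitch (440 Hz) and the same peak amplitude but different harmonic weights, then reads the magnitude of each harmonic from the FFT. The sample rate, duration, and harmonic weights are arbitrary assumptions chosen for illustration; real instrument tones also differ in their temporal envelopes, which this example ignores.

```python
import numpy as np

SR = 16000      # sample rate in Hz (assumed)
F0 = 440.0      # shared pitch (A4)
DURATION = 1.0  # seconds; 440 whole cycles, so each harmonic falls on an exact FFT bin

def synth_tone(harmonic_weights, f0=F0, sr=SR, duration=DURATION):
    """Additive synthesis: harmonic k+1 (at (k+1)*f0) gets weight harmonic_weights[k].
    The result is peak-normalized so all tones share the same maximum amplitude."""
    t = np.arange(int(sr * duration)) / sr
    x = sum(w * np.sin(2 * np.pi * (k + 1) * f0 * t)
            for k, w in enumerate(harmonic_weights))
    return x / np.max(np.abs(x))

def harmonic_profile(x, f0=F0, sr=SR, n_harmonics=5):
    """FFT magnitude at the first n_harmonics multiples of f0, relative to the fundamental."""
    spectrum = np.abs(np.fft.rfft(x))
    freqs = np.fft.rfftfreq(len(x), d=1 / sr)
    mags = np.array([spectrum[np.argmin(np.abs(freqs - k * f0))]
                     for k in range(1, n_harmonics + 1)])
    return mags / mags[0]

# Two hypothetical tones: same pitch, same peak level, different harmonic content.
mellow = synth_tone([1.0, 0.1, 0.02])           # energy concentrated in the fundamental
bright = synth_tone([1.0, 0.8, 0.6, 0.5, 0.4])  # strong upper harmonics

print(np.round(harmonic_profile(mellow), 2))    # approximately [1, 0.1, 0.02, 0, 0]
print(np.round(harmonic_profile(bright), 2))    # approximately [1, 0.8, 0.6, 0.5, 0.4]
```

Both tones would be heard at the same pitch and level; only the distribution of energy across harmonics, one ingredient of timbre, tells them apart.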

The main AI approach in this context is machine learning, the subfield of AI concerned with enabling computers to learn patterns from data rather than following explicitly programmed rules. For timbre-based instrument classification, machine learning algorithms are trained on large datasets of labeled audio samples from various instruments. They learn to recognize patterns in the acoustic features of each instrument and use this knowledge to classify new, unseen samples.
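The train-then-classify loop can be illustrated with a k-nearest-neighbors classifier, swapped in here only because it fits in a few lines; it is not the model used in this research. The two feature columns (spectral centroid in Hz and attack time in seconds), the class names, and all numeric values are hypothetical, generated synthetically rather than measured from real instruments.

```python
import numpy as np
from collections import Counter

def standardize(train_X, X):
    """Z-score X with the training mean and std, so a feature measured in Hz
    does not dominate one measured in seconds."""
    mu = train_X.mean(axis=0)
    sigma = train_X.std(axis=0)
    sigma[sigma == 0] = 1.0
    return (X - mu) / sigma

def knn_classify(train_X, train_y, query_X, k=3):
    """Label each query vector by majority vote among its k nearest
    training vectors (Euclidean distance in standardized feature space)."""
    train_X = np.asarray(train_X, dtype=float)
    query_X = np.atleast_2d(np.asarray(query_X, dtype=float))
    z_train = standardize(train_X, train_X)
    z_query = standardize(train_X, query_X)
    preds = []
    for z in z_query:
        dist = np.linalg.norm(z_train - z, axis=1)
        nearest = np.argsort(dist)[:k]
        preds.append(Counter(train_y[i] for i in nearest).most_common(1)[0][0])
    return preds

# Synthetic training set: [spectral centroid (Hz), attack time (s)]
# for three hypothetical instrument classes (made-up values).
rng = np.random.default_rng(0)
centers = {"instrument_a": (800, 0.02),
           "instrument_b": (1800, 0.10),
           "instrument_c": (3000, 0.05)}
train_X, train_y = [], []
for label, (centroid, attack) in centers.items():
    for _ in range(20):
        train_X.append([centroid + rng.normal(0, 100), attack + rng.normal(0, 0.005)])
        train_y.append(label)
train_X = np.array(train_X)

# Classify two "unseen" samples.
print(knn_classify(train_X, train_y, [[850, 0.021], [2950, 0.048]]))
```

Standardizing with the training statistics matters here: without it, the centroid column (hundreds of Hz) would swamp the attack-time column (hundredths of a second) in the distance computation.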

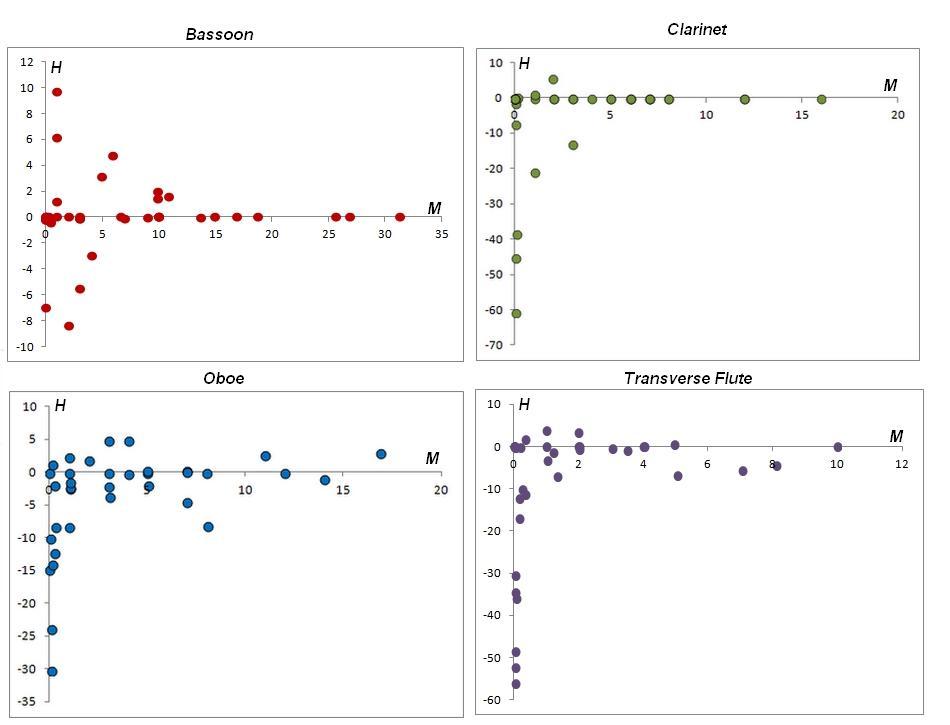

Feature extraction is a key component of the process: relevant information about the timbral characteristics of each instrument is distilled from the audio signals. Typical features describe how energy is distributed across frequency (for example, the spectral envelope and the relative strength of the harmonics) and how the sound evolves in time (for example, attack and release times). Machine learning models, often based on techniques such as deep learning or support vector machines, then use these extracted features to classify and differentiate between instruments.
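Two widely used timbral features can be computed directly from a waveform: the spectral centroid, which summarizes spectral content as a single "brightness" value, and the attack time, measured here as the 10% to 90% rise of a frame-wise RMS envelope. This is a minimal NumPy sketch; the frame size and thresholds are assumptions, and dedicated audio libraries offer more robust, windowed implementations.

```python
import numpy as np

def spectral_centroid(x, sr):
    """Magnitude-weighted mean frequency of the spectrum, in Hz.
    Higher values roughly correspond to a 'brighter' sound."""
    mag = np.abs(np.fft.rfft(x))
    freqs = np.fft.rfftfreq(len(x), d=1 / sr)
    return float(np.sum(freqs * mag) / np.sum(mag))

def attack_time(x, sr, frame=256, lo=0.1, hi=0.9):
    """Seconds for the frame-wise RMS envelope to rise from `lo` to `hi`
    of its peak value (resolution: one frame)."""
    n_frames = len(x) // frame
    frames = x[: n_frames * frame].reshape(n_frames, frame)
    env = np.sqrt(np.mean(frames ** 2, axis=1))
    env = env / env.max()
    start = np.argmax(env >= lo)   # first frame at or above lo * peak
    end = np.argmax(env >= hi)     # first frame at or above hi * peak
    return float((end - start) * frame / sr)

# Example: a 1 kHz tone with a linear 100 ms attack followed by a sustain.
SR = 16000
t = np.arange(SR) / SR
tone = np.minimum(t / 0.1, 1.0) * np.sin(2 * np.pi * 1000 * t)
features = np.array([spectral_centroid(tone, SR), attack_time(tone, SR)])
print(features)
```

The measured attack time should come out near 0.08 s: the 10% to 90% rise covers 80% of the 100 ms ramp, quantized to 16 ms frames. A vector like `features`, computed for every recording, is what a classifier such as an SVM would be trained on.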

One notable advantage of using AI for instrument classification is its ability to handle large and complex datasets efficiently. Manual or rule-based timbre analysis is difficult to apply to thousands of recordings, whereas machine learning models can be trained on large collections of audio, which generally yields more comprehensive and accurate models.

Our approach

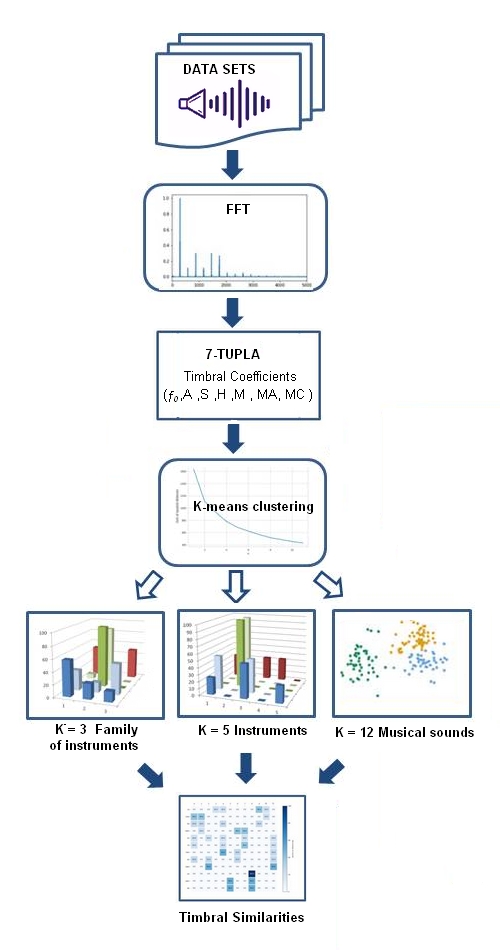

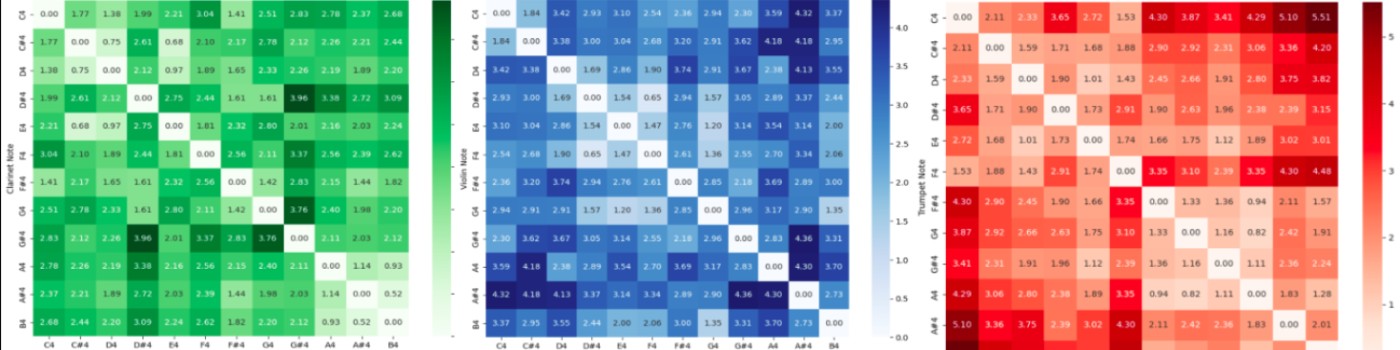

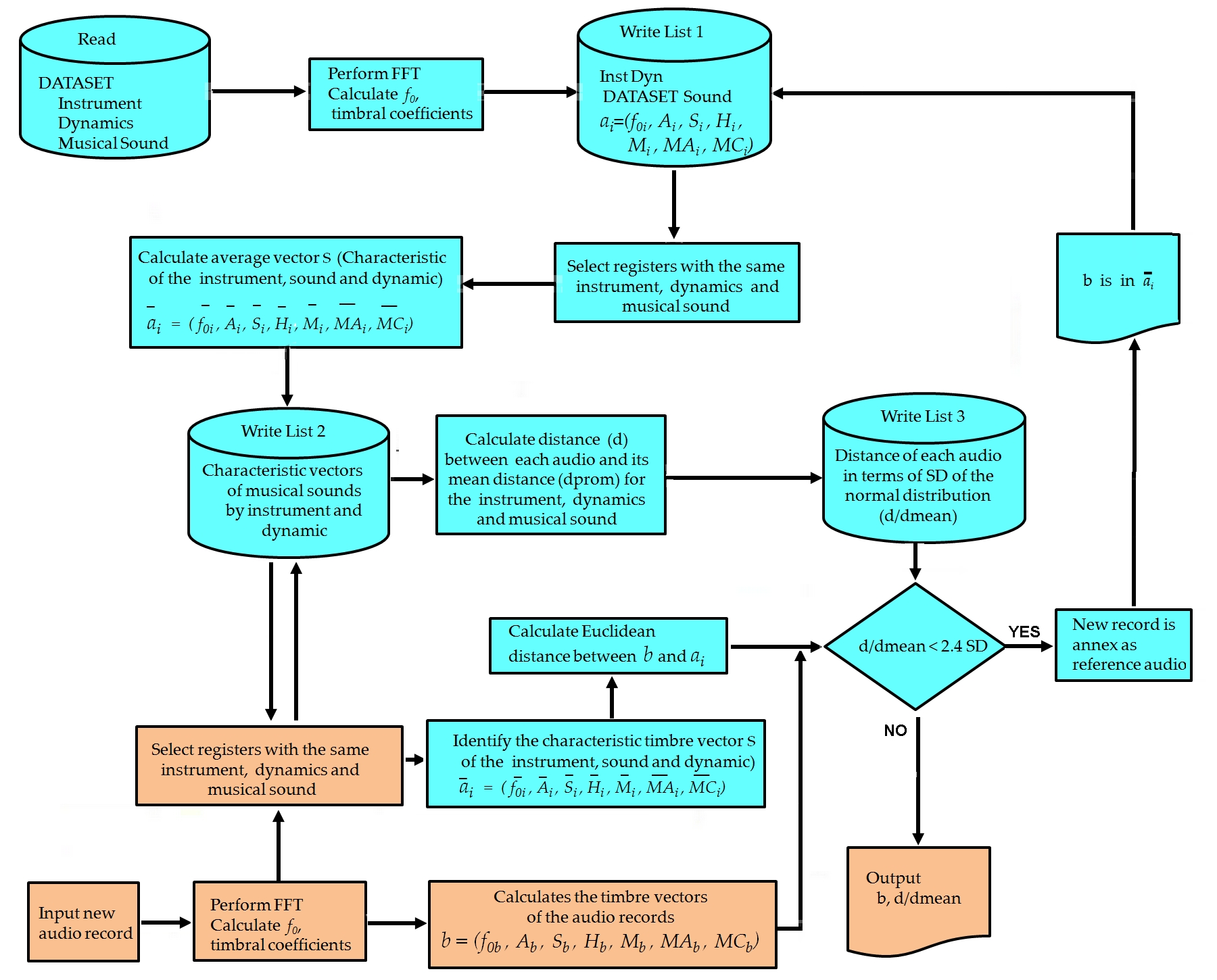

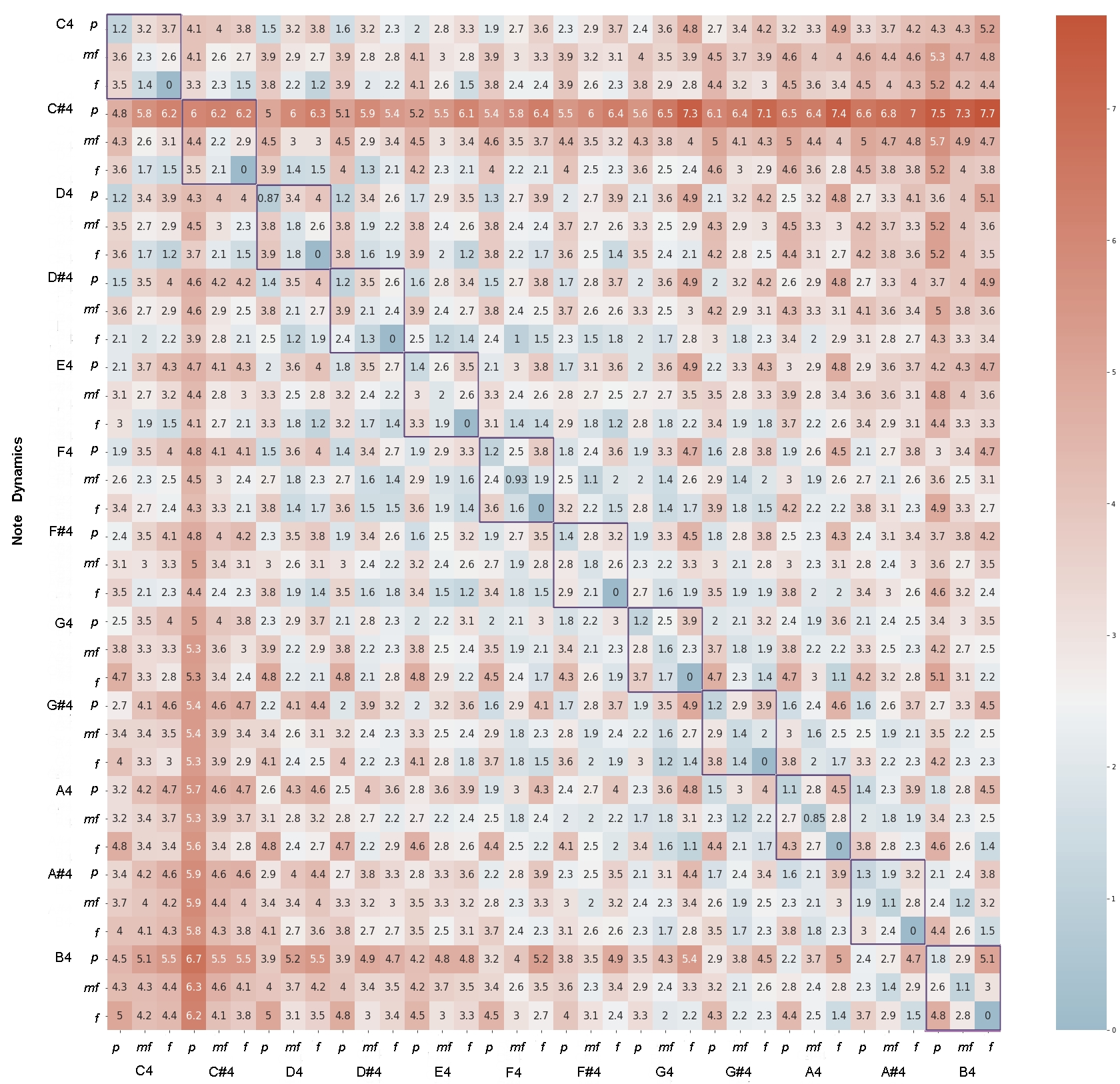

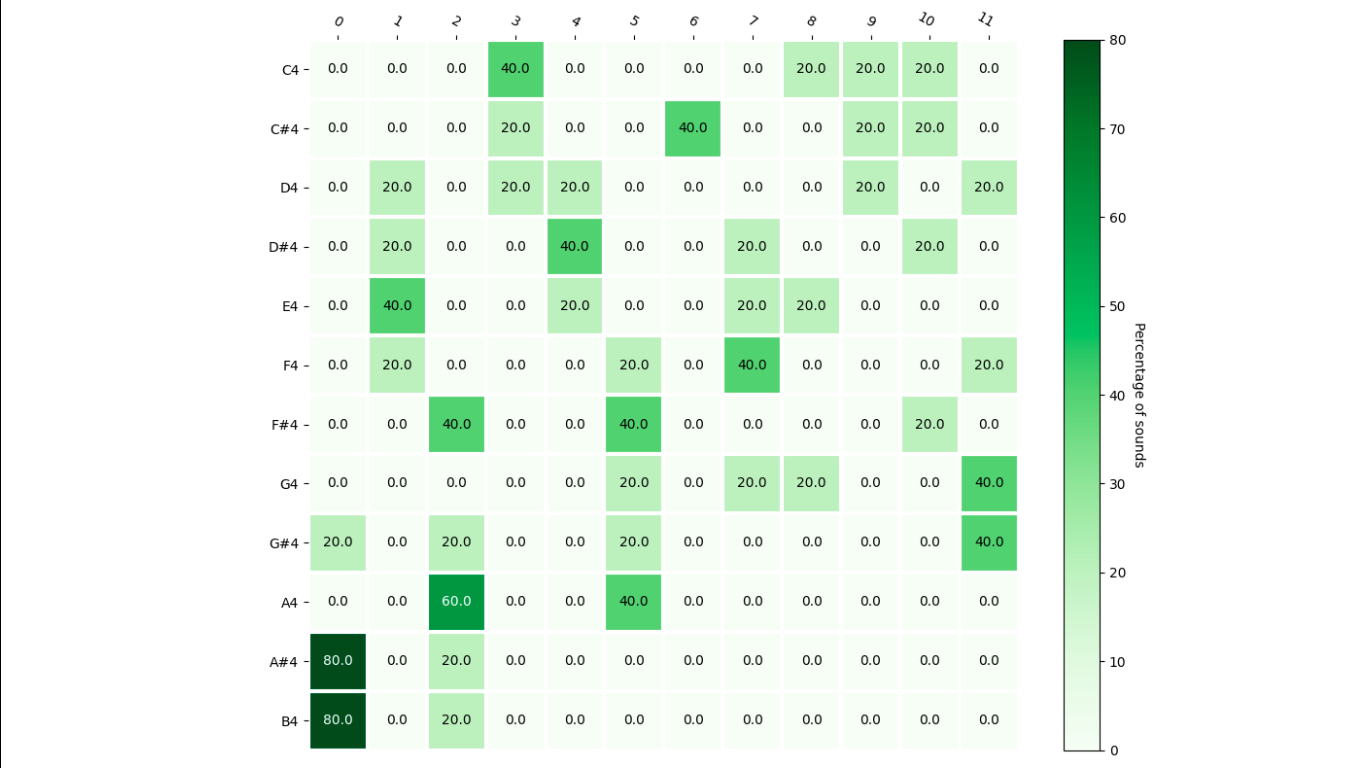

My research focuses on FFT-Acoustic descriptors and on applying classification and clustering algorithms to recognize patterns associated with musical timbre. With these algorithms we have classified musical notes, dynamics, musical instruments, and instrument families from a large set of audio recordings drawn from the ‘TinySol’ and ‘Good-sounds’ libraries. In addition, we have used clustering algorithms to identify meaningful groupings among instrument categories and among musical notes, which supports the study of timbral similarity (see the Publications section).
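The general idea of clustering FFT-based descriptors by timbral similarity can be sketched as follows. This is not the FFT-Acoustic descriptor definition or the clustering method from the publications: `band_energy_descriptor` is a hypothetical stand-in (log of normalized energy in equal-width FFT bands), plain k-means is swapped in as the clustering algorithm, and the input tones are synthetic.

```python
import numpy as np

def band_energy_descriptor(x, n_bands=16):
    """Hypothetical FFT-based descriptor: energy in n_bands equal-width frequency
    bands, normalized to sum to 1 (factoring out loudness), then log-compressed."""
    power = np.abs(np.fft.rfft(x)) ** 2
    energy = np.array([b.sum() for b in np.array_split(power, n_bands)])
    energy = energy / energy.sum()
    return np.log10(energy + 1e-12)

def kmeans(X, k, n_iter=50):
    """Plain k-means with deterministic farthest-point initialization."""
    X = np.asarray(X, dtype=float)
    centers = [X[0]]
    for _ in range(1, k):
        # Next center: the point farthest from all centers chosen so far.
        d = np.min([np.linalg.norm(X - c, axis=1) for c in centers], axis=0)
        centers.append(X[np.argmax(d)])
    centers = np.array(centers)
    for _ in range(n_iter):
        dists = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=2)
        labels = np.argmin(dists, axis=1)
        new_centers = np.array([X[labels == j].mean(axis=0) if np.any(labels == j)
                                else centers[j] for j in range(k)])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels, centers

def synth_tone(weights, rng, f0=440.0, sr=16000, duration=0.5, noise=0.01):
    """Synthetic harmonic tone (peak-normalized) plus a little white noise."""
    t = np.arange(int(sr * duration)) / sr
    x = sum(w * np.sin(2 * np.pi * (k + 1) * f0 * t) for k, w in enumerate(weights))
    return x / np.max(np.abs(x)) + rng.normal(0, noise, len(t))

rng = np.random.default_rng(1)
tones = ([synth_tone([1.0, 0.1, 0.02], rng) for _ in range(3)] +           # "mellow"
         [synth_tone([1.0, 0.8, 0.6, 0.5, 0.4], rng) for _ in range(3)])  # "bright"
descriptors = np.array([band_energy_descriptor(x) for x in tones])
labels, _ = kmeans(descriptors, k=2)
print(labels)
```

The three mellow tones and the three bright tones should fall into separate clusters, because their descriptors differ mainly in the bands that hold the upper harmonics. With real recordings, the same pipeline would group instruments or notes whose spectra are similar.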